
Essence

Artificial intelligence (AI) has become part of the daily lexicon, and an endless stream of media reports asserts that AI either has affected or will affect most aspects of human life. What is AI and what are its components? How is it being used in GNSS technology? What is the near-term potential of AI in GNSS/PNT? These are weighty, evolving questions for which this column attempts an initial synthesis.

AI definitions and descriptions vary widely. One general and broad definition from IBM (2025) is “Artificial intelligence (AI) is technology that enables computers and machines to simulate human learning, comprehension, problem solving, decision-making, creativity and autonomy.” The idea of thinking machines (Turing, 1950) and the term “artificial intelligence” were introduced in the 1950s (McCarthy, 2007). The 1960s and 1970s saw the development of neural networks. The 1980s brought advances in neural network training and deep learning. The 1990s saw rapid advances in computing power. Big data and cloud computing developments in the 2000s allowed for the management and analysis of large datasets. The 2010s brought deep neural networks/deep learning, and the 2020s have seen the introduction and flourishing of large language models.

This column primarily focuses on the impacts that AI is directly having and could potentially have on GNSS hardware and PNT solutions, including receiver signal acquisition, measurement processing, position estimation, integrity and mitigation of jamming and spoofing. Due to space limitations, it will limit discussion of topics such as GNSS-based sensor fusion, navigation system routing and application-specific customizations, all of which are undergoing significant AI-related infusions. A suitable guide to consider is the list of tasks for which evolving AI approaches can outperform existing methods in meaningful and efficient ways. For example, in error modeling or optimal estimation, can AI-based techniques fill gaps in non- or only partially-deterministic processes?

Essential

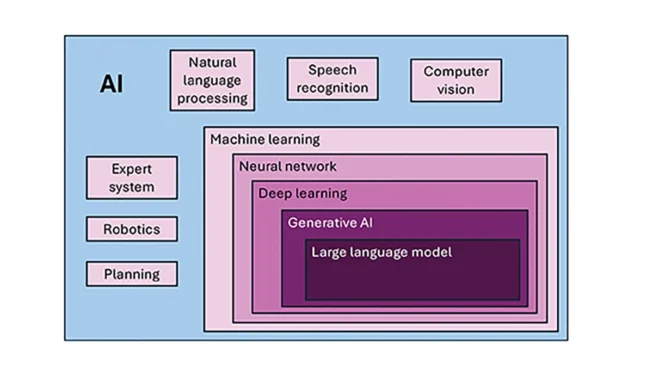

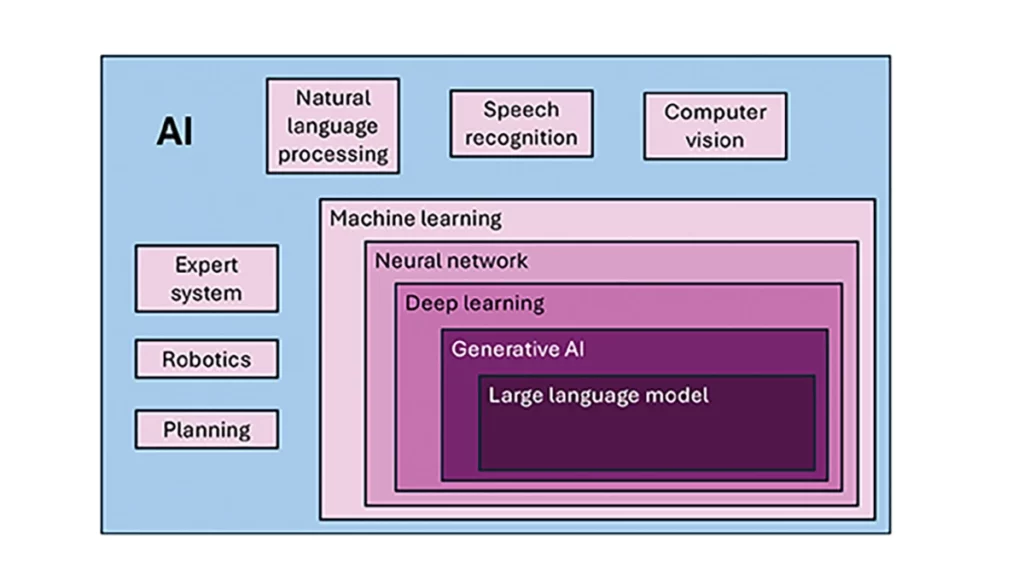

To investigate the current and potential uses of AI in GNSS, it is essential to define its components, especially as some terms are misused or conflated. The presented description is based on a wealth of Internet-based information, including from IBM (2025). Figure 1 illustrates the current broad concepts within, or subsets of, AI based on a synthesis of the nomenclature in use. In the figure, AI (defined here as a machine that exhibits human-like intelligence) is the superset. Within AI, there are many concepts or subsets that can be categorized, though they can overlap. There is perception intelligence, such as text and speech recognition, and there is the broad area of machine learning.

Sophisticated processes have been developed and continue to rapidly evolve to give machines the ability to sense, learn and make decisions. Natural language processing (NLP) allows machines to recognize, understand and generate text following human language. Voice recognition is similar, in that the machine transcribes speech to text and back. Computer vision enables machines to interpret and analyze imagery. While robotics is a field of its own, within the superset of AI, it can be seen as an application of AI to motion. Planning refers to autonomously solving planning and scheduling problems. Expert systems form the field of AI dedicated to simulating human expertise, judgment and behavior. All of these AI subsets are typically enhanced with machine learning (ML).

ML involves the development of algorithms and statistical models that can infer patterns (i.e., learn) from existing data without explicit instructions (i.e., rote training) and apply this knowledge to new data. Based on the learning approach, there are four types of machine learning algorithms: supervised, semi-supervised, unsupervised and reinforcement. (ML can also be classified by functionality.) Supervised learning uses manually labeled datasets to accurately train algorithms to classify data or predict outcomes. In semi-supervised learning and unsupervised learning, relationships are found with less or no explicit human interaction, respectively. Reinforcement learning combines these approaches with goal optimization. There are many types of ML techniques/algorithms, such as linear regression, logistic regression, decision trees, random forest, support vector machines, k-nearest neighbor and clustering, each designed for different types of problems and data.
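To make the idea of supervised learning concrete, the following minimal sketch implements the k-nearest neighbor technique named above using only NumPy. The two-feature dataset, the class centers and the choice of k are all hypothetical and serve only to show how labeled data are used to classify new, unlabeled points.

```python
import numpy as np

def knn_predict(train_x, train_y, query_x, k=3):
    """Classify each query point by majority vote among its k nearest training points."""
    train_x = np.asarray(train_x, dtype=float)
    train_y = np.asarray(train_y)
    query_x = np.asarray(query_x, dtype=float)
    # Pairwise Euclidean distances, shape (n_query, n_train)
    dists = np.linalg.norm(query_x[:, None, :] - train_x[None, :, :], axis=2)
    # Indices of the k closest training samples for each query point
    nearest = np.argsort(dists, axis=1)[:, :k]
    predictions = []
    for row in nearest:
        labels, counts = np.unique(train_y[row], return_counts=True)
        predictions.append(labels[np.argmax(counts)])
    return np.array(predictions)

# Hypothetical labeled training data: two features, two well-separated classes
rng = np.random.default_rng(0)
class0 = rng.normal(loc=[0.0, 0.0], scale=0.5, size=(50, 2))
class1 = rng.normal(loc=[3.0, 3.0], scale=0.5, size=(50, 2))
train_x = np.vstack([class0, class1])
train_y = np.array([0] * 50 + [1] * 50)

# New, unlabeled points: one near each class center
new_points = np.array([[0.2, -0.1], [2.8, 3.1]])
predicted = knn_predict(train_x, train_y, new_points, k=5)
print(predicted)
```

No model parameters are estimated here: the labeled data themselves act as the model, which is one reason k-nearest neighbor is often used as a simple baseline before more elaborate algorithms are tried.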

Neural networks (NNs), or artificial neural networks, are modeled after the human brain. A neural network model contains an input layer and an output layer, each with a set of nodes. These layers are interconnected through a set of hidden layers of nodes, with each connection between nodes having a weight and each node a bias, determined (i.e., estimated) from the specified network inputs and outputs by one of a selection of optimization techniques. NNs can work well for tasks that involve identifying complex patterns and relationships given large amounts of data, though the details of specific parameter interrelationships cannot necessarily be determined by such models, which are therefore sometimes referred to as “black box” models. There are several types of neural networks, including convolutional NNs, recurrent NNs, long short-term memory networks, autoencoders and transformers.
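The layered structure described above can be sketched in a few lines of NumPy. This is only an illustration of a forward pass through a small fully connected network: the layer sizes, the ReLU activation and the random weights are assumptions made for the example, and in practice the weights and biases would be estimated by training against known inputs and outputs.

```python
import numpy as np

def relu(x):
    """Rectified linear unit, a common hidden-layer activation."""
    return np.maximum(0.0, x)

def forward(x, layers):
    """Propagate inputs through a list of (weights, bias) pairs."""
    activation = x
    for i, (w, b) in enumerate(layers):
        z = activation @ w + b
        # Nonlinear activation on hidden layers; the output layer is left linear
        activation = relu(z) if i < len(layers) - 1 else z
    return activation

rng = np.random.default_rng(42)
sizes = [4, 8, 8, 1]  # 4 inputs, two hidden layers of 8 nodes each, 1 output

# One weight per connection between adjacent layers, one bias per receiving node
layers = [(rng.normal(scale=0.5, size=(n_in, n_out)), np.zeros(n_out))
          for n_in, n_out in zip(sizes[:-1], sizes[1:])]

batch = rng.normal(size=(5, 4))  # five hypothetical input samples
output = forward(batch, layers)
n_params = sum(w.size + b.size for w, b in layers)
print(output.shape, n_params)
```

Even this tiny network has over a hundred adjustable parameters, which hints at why the individual parameter values of larger networks are hard to interpret.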

Deep learning refers to the depth of layers in a neural network. A deep learning neural network contains at least three, but typically hundreds of, hidden layers. Having many layers allows for unsupervised, fast and accurate identification of complex patterns and relationships. Generative AI can be described as deep learning models that generate new/original content, e.g., text, image or audio data through a variety of training, tuning and generation processes. Finally, large language models can read, understand and generate human language (refer to NLP), making use of all the functionality of ML.

Elements

How machine learning is used in GNSS

So, when should AI be used in GNSS/PNT tasks? A rudimentary answer is whenever AI can perform better (in some specified and measurable sense) than existing methods. The determination of this answer for a particular scenario requires research. From the descriptions of AI and its subsets, GNSS/PNT output is used in myriad AI applications such as sensor fusion, autonomous vehicle navigation, route planning, etc. However, it is primarily the ML subset of AI that is being researched for use in GNSS signal and measurement processing.

ML models can be categorized by their fundamental methodology, as either generative or discriminative, or by the tasks for which they are used: either regression or classification (IBM, 2025). Generative algorithms model the distribution of data points with the goal of predicting the joint probability of a data point appearing in a particular space, whereas discriminative algorithms model the boundaries between classes of data with the goal of predicting the conditional probability of a given data point being in a specific class. Regression models predict continuous values and are mainly used to determine the relationship between one or more independent variables and a dependent variable, whereas classification models predict discrete values and are mainly used to determine a category or class, e.g., binary or multi-class.
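In probabilistic notation, the generative/discriminative distinction can be written compactly. This is a standard textbook formulation rather than anything specific to the cited sources; the symbols $x$ (input features) and $y$ (class label) are introduced here only for illustration.

$$
\text{generative: } p(x, y) = p(x \mid y)\, p(y),
\qquad
\text{discriminative: } p(y \mid x)
$$

$$
p(y \mid x) = \frac{p(x \mid y)\, p(y)}{\sum_{y'} p(x \mid y')\, p(y')}
$$

The second expression is Bayes' rule: a generative model can still be used for classification by converting its joint distribution into a conditional one, whereas a discriminative model estimates $p(y \mid x)$ directly without modeling how the features themselves are distributed.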

Siemuri et al. (2022) provide a comprehensive review of recent research (from 2020 through 2021) in which ML techniques are used in GNSS problem solving and provide a categorization of GNSS use cases. Relevant key findings include: 1) ML is proposed to increase GNSS/PNT robustness under degraded signal environments; 2) more than 200 studies were assessed; 3) in most cases, the ML approaches outperformed (at varying levels of significance) the traditional GNSS models; and 4) industry adoption of ML in GNSS so far appears limited. The analysis found that neural networks, including some deep learning, were used in more than half of the studies (55%), while support vector machine and decision tree/random forest techniques were used in 19% and 10% of the studies, respectively. Use cases for machine learning in GNSS were categorized as: i) signal acquisition; ii) signal detection and classification; iii) Earth observation and monitoring; iv) navigation and positioning; v) denied environments and indoor navigation; vi) atmospheric effects; vii) spoofing and jamming; viii) GNSS/inertial integration; ix) satellite selection; and x) LEO satellite orbit determination and positioning.

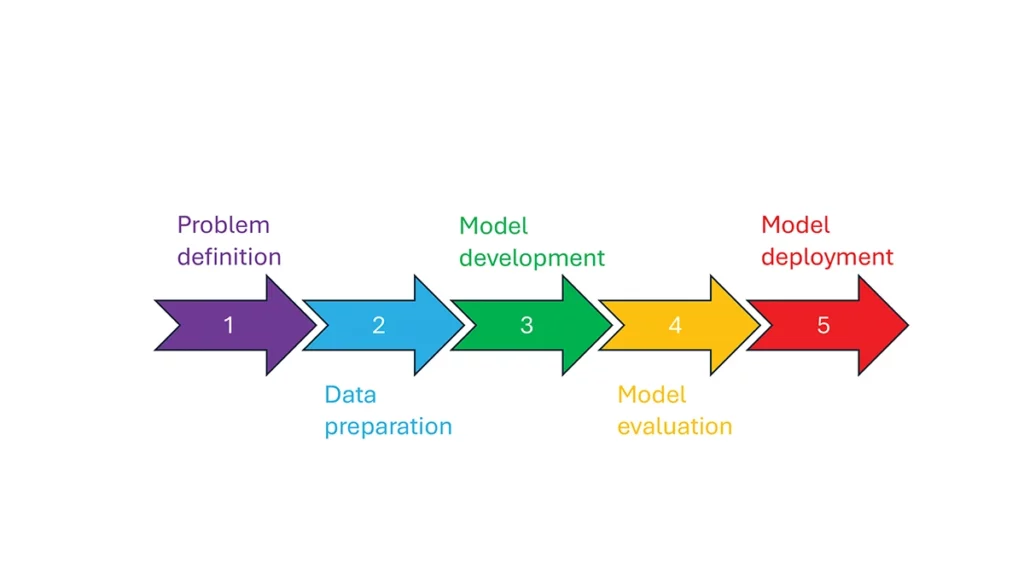

So, how is machine learning used in these GNSS/PNT use cases — and in general? How ML is applied can be described as a set of steps or a cycle with a varying number of components. Figure 2 presents a graphical synthesis from the literature, with a grouping of five core steps.

- Step 1 — problem definition: understanding the problem(s) and goals, defining the available data, defining the problem inputs and outputs, determining the category of ML to use and selecting evaluation metrics.

- Step 2 — data preparation: collecting the data, editing them, and labeling them if employing supervised classification.

- Step 3 — model development: selecting the algorithm, selecting the model, building the model and training the model.

- Step 4 — model evaluation: validating the model, tuning the model, analyzing the results, cross-validating the results and applying the evaluation metrics.

- Step 5 — model deployment: finalizing the model, applying the model in prediction, and, if necessary, feeding back into the start of the cycle.

The scikit-learn (2025) library is a popular resource for Python-based ML information, tools and examples. An illustrative example of how ML can be used in GNSS for signal classification and measurement weighting is given by Li et al. (2023). The authors describe the process for designing the ML problem-solving scenario, selecting the models that are either of the regression or classification type and comparing the performance of many popular ML models to detect direct line-of-sight versus non-line-of-sight and multipath signals in urban environments. Note that most applications of machine learning in GNSS involve some form of supervised classification.
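The five steps can be sketched end to end with scikit-learn on a toy line-of-sight (LOS) versus non-line-of-sight (NLOS) classification problem. Everything specific in this sketch is an assumption made for illustration: the two features (carrier-to-noise density ratio and elevation angle), their synthetic distributions and the choice of a random forest are not the configuration used by Li et al. (2023), and the data are simulated rather than measured.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import cross_val_score, train_test_split

# Step 1, problem definition: binary supervised classification,
# LOS (label 0) versus NLOS (label 1), evaluated by accuracy.

# Step 2, data preparation: synthetic labeled samples (not real measurements).
# Columns: C/N0 [dB-Hz], elevation angle [deg]; both distributions are assumed.
rng = np.random.default_rng(1)
n = 500
los = np.column_stack([rng.normal(45.0, 3.0, n), rng.uniform(20.0, 90.0, n)])
nlos = np.column_stack([rng.normal(32.0, 4.0, n), rng.uniform(5.0, 60.0, n)])
features = np.vstack([los, nlos])
labels = np.array([0] * n + [1] * n)
x_train, x_test, y_train, y_test = train_test_split(
    features, labels, test_size=0.25, random_state=1, stratify=labels)

# Step 3, model development: select, build and train the model.
model = RandomForestClassifier(n_estimators=100, random_state=1)
model.fit(x_train, y_train)

# Step 4, model evaluation: cross-validate on the training set,
# then apply the evaluation metric to the held-out test set.
cv_scores = cross_val_score(model, x_train, y_train, cv=5)
test_accuracy = accuracy_score(y_test, model.predict(x_test))

# Step 5, model deployment: apply the trained model to new signals.
new_signals = np.array([[47.0, 70.0], [28.0, 15.0]])
predicted = model.predict(new_signals)

print("cross-validation accuracy:", cv_scores.mean())
print("test accuracy:", test_accuracy)
print("predicted classes:", predicted)
```

With real data, most of the effort would typically go into step 2, since labeling signals as LOS or NLOS requires independent truth such as a 3D building model or a sky-facing camera.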

Initial and potential machine learning uses in GNSS

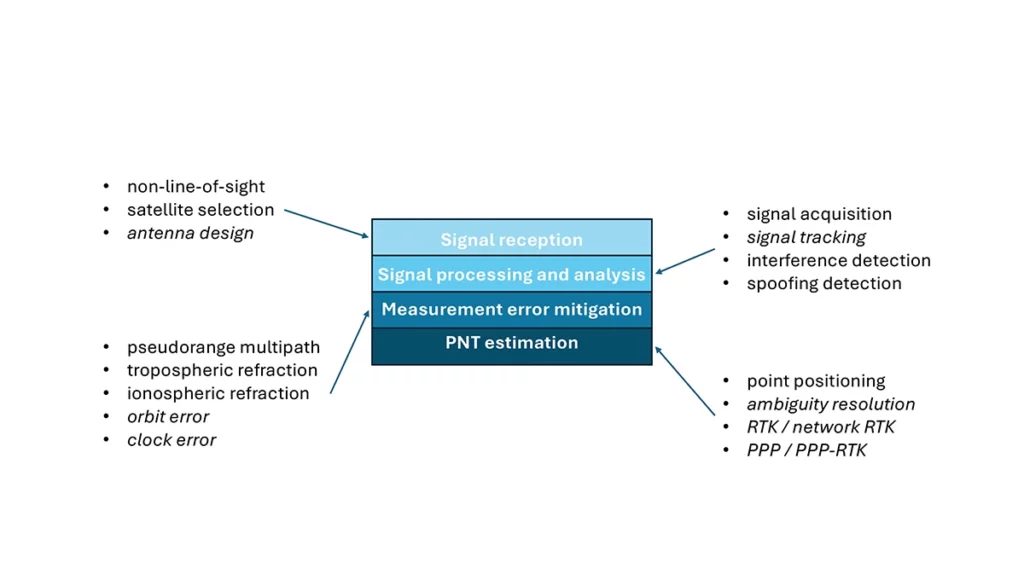

For this column, a brief synopsis is given of the use of machine learning in GNSS in the context of the application themes of signal reception, signal processing, measurement error mitigation and PNT estimation, as illustrated in Figure 3. Correspondingly, potential ML uses are also considered.

Signal reception

Studies including Tsu (2017) and Li et al. (2023) have used various machine learning models to differentiate between line-of-sight, non-line-of-sight and pseudorange multipath GNSS signals in urban environments. Various input features, such as signal strength, are used to train the models, which then classify most signals correctly. ML has been used to optimize satellite selection (rather than using all available tracked satellites) for efficient PNT processing. Radio frequency hardware and software simulators can use ML to improve the realism of propagated signals in various environments and under different dynamics, including multipath, interference and spoofing. There is also the potential for ML to be used to improve antenna design, including for controlled radiation pattern antennas that generate one or multiple nulls.

Signal processing and analysis

Deep learning models have been used for signal acquisition and show improvement over current methods with simulated data (Borhani-Darian et al., 2023). There may be potential for the use of ML in signal tracking or in the design of new tracking algorithms and processes. Studies have shown that ML can be used to detect natural and intentional radio frequency interference. Various ML models have successfully been used to produce accurate classification of radio frequency interference jammer types (e.g., Morales Ferre et al., 2019). ML has also been used to detect signal spoofing with simulated and real signals with high levels of validation (e.g., Semanjski et al., 2020).

Measurement error mitigation

As GNSS multipath is a non-deterministic (and non-zero mean) process, it is a strong candidate for machine learning-based mitigation, especially meter-level pseudorange multipath (compared to centimeter-level carrier-phase multipath). Such studies, combined with non-line-of-sight classification, have been described in the previous section.

Initial investigations of the use of machine learning in the mitigation of tropospheric refraction appear promising (e.g., Łoś et al., 2020). The wet tropospheric delay on GNSS signals is irregular, making it difficult to predict. Therefore, there is great potential for improved anomaly detection, refraction modeling and more accurate severe weather nowcasting.

As with tropospheric refraction, ionospheric refraction, while well understood, is difficult to model accurately, especially during periods of high solar activity. Machine learning has been shown to accurately detect anomalies and scintillation (e.g., Linty et al., 2018) and has potential for nowcasting.

There is the potential to improve GNSS satellite orbit and clock estimation with ML, as these are both well-defined processes, but also contain levels of process uncertainty. For example, it is usual to include once-per-orbital revolution empirical accelerations in orbit estimation states, and satellite force models can always be improved. Consequently, ML studies may aid in such GNSS network processing to improve the accuracy of real-time and post-processed correction products.
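As a reminder of what such empirical terms look like, one common parameterization of a once-per-revolution acceleration along a single axis is shown below. The symbols are introduced here for illustration: $u$ is the satellite's argument of latitude, and $a_0$, $a_c$ and $a_s$ are coefficients estimated alongside the orbit; specific analysis centers may use different forms.

$$
a_{\mathrm{emp}}(u) = a_0 + a_c \cos u + a_s \sin u
$$

Terms of this kind absorb force-model deficiencies without explaining them physically, which is the sort of residual, partially deterministic behavior for which a data-driven model might be investigated.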

PNT estimation

Well-established optimal estimation techniques such as least-squares and Kalman filtering work extremely well for most GNSS/PNT estimation cases. However, hardware limitations and environmental conditions can lead to measurements not meeting the technical assumptions of these conventional approaches, e.g., the use of independent measurements, the absence of systematic errors, the absence of gross errors, the use of realistic measurement variances, etc. Deep learning models have the potential to improve GNSS point positioning (e.g., Kanhere et al., 2022) in test data, if poor model numerical conditioning, changing satellite visibility and model overfitting are managed. There is potential research in the use of machine learning methods to improve carrier-phase ambiguity resolution, and in the centimeter-level positioning techniques of real-time kinematic (RTK)/network RTK, and precise point positioning (PPP)/PPP-RTK.
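The sensitivity of conventional estimation to its assumptions can be shown with a small weighted least-squares point-positioning sketch in NumPy. The satellite coordinates, the receiver position, the clock bias and the 50 m non-line-of-sight bias are all hypothetical values chosen for the example, and the measurements are otherwise noise free. The sketch compares a solution using realistic-looking but wrong equal weights with one in which the biased measurement is down-weighted, as might be done if a classifier had flagged it.

```python
import numpy as np

def solve_position(sat_pos, pseudoranges, sigmas, iterations=20):
    """Iterated weighted least squares for receiver position and clock bias [m]."""
    state = np.zeros(4)  # start at Earth's center with zero clock bias
    weights = np.diag(1.0 / np.asarray(sigmas, dtype=float) ** 2)
    for _ in range(iterations):
        delta = sat_pos - state[:3]
        ranges = np.linalg.norm(delta, axis=1)
        residuals = pseudoranges - (ranges + state[3])
        # Design matrix: negative unit line-of-sight vectors plus a clock column
        design = np.hstack([-delta / ranges[:, None], np.ones((len(ranges), 1))])
        normal = design.T @ weights @ design
        step = np.linalg.solve(normal, design.T @ weights @ residuals)
        state = state + step
        if np.linalg.norm(step) < 1e-4:
            break
    return state

def position_error(estimate, truth):
    """3D distance between the estimated and true receiver positions [m]."""
    return np.linalg.norm(estimate[:3] - truth)

# Hypothetical receiver on the equator and six satellites above its horizon (ECEF, m)
truth = np.array([6378137.0, 0.0, 0.0])
clock_bias = 1500.0
sat_pos = 1e3 * np.array([
    [26560.0, 0.0, 0.0],
    [20000.0, 17000.0, 3000.0],
    [20000.0, -15000.0, 8000.0],
    [18000.0, 5000.0, -18000.0],
    [19000.0, -4000.0, 18000.0],
    [22000.0, 12000.0, -9000.0],
])
clean = np.linalg.norm(sat_pos - truth, axis=1) + clock_bias

# Case 1: all assumptions hold (no errors in the measurements)
err_clean = position_error(solve_position(sat_pos, clean, np.ones(6)), truth)

# Case 2: one measurement carries a 50 m bias but is weighted like the others
biased = clean.copy()
biased[3] += 50.0
err_equal = position_error(solve_position(sat_pos, biased, np.ones(6)), truth)

# Case 3: the same biased measurement is down-weighted (sigma of 100 m versus 1 m)
sigmas = np.ones(6)
sigmas[3] = 100.0
err_down = position_error(solve_position(sat_pos, biased, sigmas), truth)

print("clean:", err_clean, "equal weights:", err_equal, "down-weighted:", err_down)
```

The estimator itself is unchanged across the three cases; only the information about measurement quality differs. That is one place where learned models could plausibly complement, rather than replace, least-squares or Kalman filtering.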

Broader AI/ML use within GNSS-based PNT

Clearly, GNSS/PNT outputs are used in a broad spectrum of applications, for which AI and ML are currently being used or have the potential of being used to attain and enhance goals. Machine learning has been used to improve GNSS-derived position time series analysis for many Earth science applications, including in plate tectonics, tsunami monitoring, vulcanology, subsidence monitoring, GNSS reference station monitoring and overall measurement integrity, and in diverse GNSS-enabled techniques such as radio occultation and reflectometry (Siemuri et al., 2022).

ML has the potential to allow for improvements in sensor fusion, chief amongst these being GNSS/inertial measurement unit (IMU) integration. Improvements can be found in IMU calibration and in managing functional and dynamic mismodeling for specific user applications. Wider multi-sensor fusion, such as for simultaneous localization and mapping solutions, relies heavily on ML approaches, such as reinforcement learning.

Finally, GNSS-based PNT is used in most of the non-ML subsets of AI. GNSS-based position information is central to many outdoor robotics, planning and computer vision algorithms, providing either seeding localization information for other sensors or processes, or core position information for the overall AI-driven system.

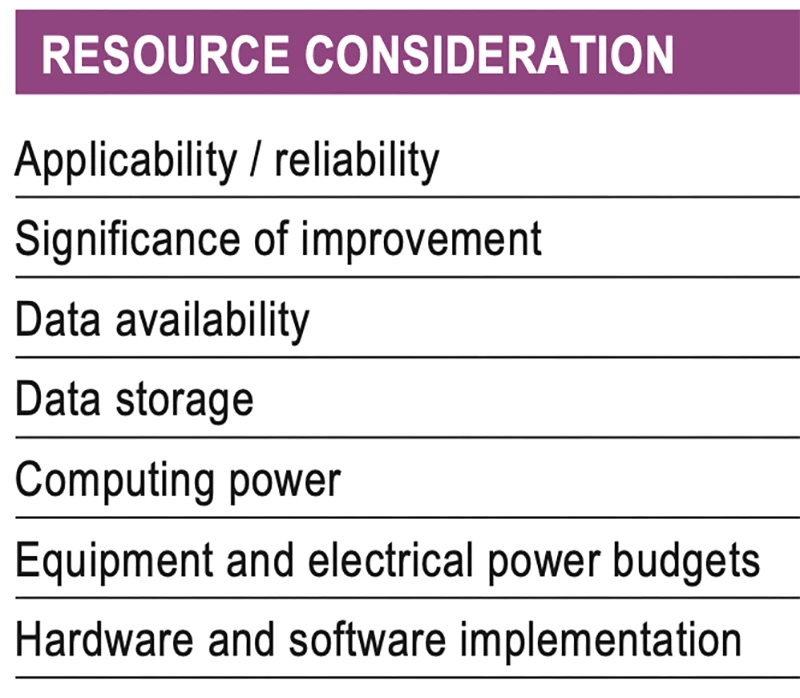

Machine learning resource considerations

As with all technology, a cost/benefit analysis is required when considering the application of ML in a specific GNSS use case. Table 1 summarizes the broad considerations. Can the problem at hand be reliably mitigated with ML, in the sense that there are complexities that are difficult or impossible to physically model, but sufficient patterns in the data to be modeled by ML? If ML can outperform a conventional approach using specified metrics, is the improvement significant to the user? Are there large enough, i.e., sufficient and varied, datasets to train a model for prediction over expected data variations? As most ML algorithms require large amounts of storage for large datasets, typically on data servers, can the necessary storage be brought to bear? Similarly, given that most ML algorithms require large amounts of computing power for myriad computational operations, typically utilizing graphics processing units (GPUs), is such computing power available? As storage servers for large datasets and GPUs for processing are expensive and require large amounts of electrical power, are the necessary financial, electrical power, environmental and security resources available? And finally, how practical is it to implement the ML model on user equipment or via servers?

Evolutionary

AI is a broad field that is rapidly developing and entering service in most technologies. While AI includes many subsets such as computer vision, natural language processing and robotics, the ML subset (which includes neural networks, deep learning and generative AI) has the most direct applicability to GNSS/PNT. Of the available ML models used in GNSS, most are supervised (i.e., they use labeled training data), and the majority use neural networks. Initial studies of applications such as signal classification and interference detection indicate that supervised ML models perform better than traditional approaches.

Many subsets of AI, such as computer vision and robotics, rely heavily on ML, while GNSS/PNT has only recently seen investigations in ML use. For many applications, conventional deterministic models, physics-based models or optimal estimation techniques work well and reach desired performance standards. However, as GNSS/PNT continues to trend toward lower-cost hardware, harsher environmental conditions and increasing safety-of-life usage, PNT outliers and corner cases grow in importance, and ML can potentially provide solutions, as outlined in Figure 3. These are the early days of investigating and applying ML in GNSS/PNT. To use ML or not to use ML: that is the question. There are many factors to consider, as described in Table 1. Performance improvements over current approaches and operational practicality (i.e., costs) will dictate ML adoption. Much more research is required in many GNSS/PNT applications, followed by significant widespread testing and tuning of developed ML models. It is difficult not to predict the near-term adoption of ML in at least some GNSS/PNT use cases, if they will benefit our daily lives. Look for future columns that will examine and investigate ML implementations in specific GNSS/PNT applications that prove its efficacy.