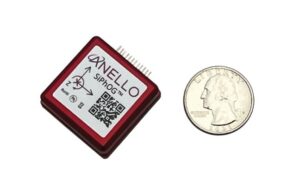

An exclusive interview with Kirstin Schauble, Director of Systems Engineering at ANELLO Photonics.

What crops are we talking about? What positional accuracy do they require? What accuracy can you achieve?

Where ANELLO has been of use in agriculture, typically we’re talking about orchards or dairy farms, because these are the use cases where you have limited open sky view, or, in some cases, no open sky view. For the orchard case, you have high-value crops, such as almonds or walnuts, where you’re driving your tractor in between these very narrow rows with trees completely covering the sky above you. So, in this case, there’s hardly any GPS availability and certainly no RTK-level GPS, which poses a challenge for autonomy in these types of agricultural environments.

Some of the stricter position requirements are on the order of 20 cm to 30 cm, which is approaching RTK-level accuracy. You can’t achieve this with standard GPS alone, even under open sky. In those cases, you really need RTK-level GPS under open sky before you enter the orchard’s GNSS-denied area. As you know, RTK gives you about 2 cm accuracy.

Then you need a dead-reckoning capability that can keep your error within 20 cm to 30 cm. This requirement is typically specified as a cross-track error. Errors along the direction of travel are slightly less important, because you can tell visually when you exit a row. However, while you’re in the row, you don’t want to run into a tree, so that cross-track error is important.
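To make the cross-track versus along-track distinction concrete, here is a minimal sketch, assuming the row centerline is a straight segment and positions are already expressed in a local east/north frame in metres. The function name `track_errors` and the frame convention are illustrative assumptions, not part of ANELLO’s system.

```python
import math


def track_errors(start, end, pos):
    """Split a position into along-track and cross-track components.

    start, end: (east, north) of the row centerline endpoints, in metres.
    pos: (east, north) of the estimated vehicle position, in metres.
    Returns (along_track, cross_track): distance along the row from `start`,
    and signed lateral offset (positive = left of the direction of travel).
    """
    dx, dy = end[0] - start[0], end[1] - start[1]
    length = math.hypot(dx, dy)
    ux, uy = dx / length, dy / length       # unit vector along the row
    px, py = pos[0] - start[0], pos[1] - start[1]
    along = px * ux + py * uy               # projection onto the row direction
    cross = ux * py - uy * px               # 2D cross product = lateral offset
    return along, cross


if __name__ == "__main__":
    # A 200 m row running north; the tractor is 25 cm right of the centerline.
    along, cross = track_errors((0.0, 0.0), (0.0, 200.0), (0.25, 120.0))
    print(f"along-track {along:.2f} m, cross-track {cross:.2f} m, "
          f"within 30 cm: {abs(cross) <= 0.30}")
```

Only the `cross` value needs to stay inside the 20 cm to 30 cm budget; an along-track error simply shifts where the tractor thinks it is along the row.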

Plus, you can use wheel odometry for the direction of travel.

Yep, exactly. We also found a lot of success in those cases where you have our optical gyro technology plus the wheel speed odometry. Without the wheel speed, you’re relying heavily on accelerometers. Growers need small and cheap systems. They cannot afford to pay $100,000 for a reference-grade system. So, they are probably going to use MEMS accelerometers, which is what we use.
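Why wheel speed matters so much with MEMS accelerometers can be shown with simple error-growth arithmetic. The sketch below assumes a constant residual accelerometer bias (double integration gives an error of 0.5·b·t²) and a constant wheel-speed scale-factor error (error s·v·t). The numbers are hypothetical illustrations, not ANELLO or sensor-vendor specifications. With these assumptions, the accelerometer-only error after 60 s is about 18 m, versus about 1.2 m for wheel odometry.

```python
def accel_only_drift_m(bias_mps2: float, t_s: float) -> float:
    """Along-track error from double-integrating a constant accelerometer bias: 0.5 * b * t^2."""
    return 0.5 * bias_mps2 * t_s ** 2


def wheel_odometry_drift_m(scale_error: float, speed_mps: float, t_s: float) -> float:
    """Along-track error from a constant wheel-speed scale-factor error: s * v * t."""
    return scale_error * speed_mps * t_s


if __name__ == "__main__":
    # Hypothetical numbers for illustration only.
    bias = 0.01    # m/s^2, roughly a 1 mg residual accelerometer bias
    scale = 0.01   # 1 % wheel-speed scale-factor error
    speed = 2.0    # m/s tractor speed
    for t in (10.0, 30.0, 60.0):
        print(f"t={t:4.0f} s  accel-only={accel_only_drift_m(bias, t):6.2f} m  "
              f"wheel odometry={wheel_odometry_drift_m(scale, speed, t):5.2f} m")
```

The key point is the shape of the curves: accelerometer-only error grows quadratically with time, while odometry error grows linearly with distance.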

So, wheel speed aiding is extremely important to maintain that distance traveled.
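A minimal 2D dead-reckoning loop shows how a gyro and wheel speed combine: the gyro yaw rate updates heading, and wheel speed advances position along that heading. This is a generic textbook sketch, not ANELLO’s sensor fusion engine. The frame (heading measured counter-clockwise from east), the function name, and the midpoint-heading integration are assumptions chosen for clarity.

```python
import math


def dead_reckon(x, y, heading, samples, dt):
    """Propagate a 2D pose from gyro yaw rate and wheel speed.

    x, y: starting position in metres (local east/north frame).
    heading: starting heading in radians, counter-clockwise from east.
    samples: iterable of (yaw_rate_rad_s, wheel_speed_m_s) pairs.
    dt: sample interval in seconds.
    """
    for yaw_rate, speed in samples:
        # Use the heading at the middle of the interval to approximate the arc.
        mid = heading + 0.5 * yaw_rate * dt
        x += speed * dt * math.cos(mid)
        y += speed * dt * math.sin(mid)
        heading += yaw_rate * dt
    return x, y, heading


if __name__ == "__main__":
    # 100 s driving straight north at 2 m/s, sampled at 10 Hz.
    print(dead_reckon(0.0, 0.0, math.pi / 2, [(0.0, 2.0)] * 1000, 0.1))
```

Any yaw-rate bias feeds directly into `heading` and then into lateral position, which is why gyro quality dominates cross-track error once GNSS is lost.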

So, the positional accuracy requirement is typically 20 cm to 30 cm and you can achieve that with your system.

Correct.

How much does your application cost? Is it installed at the factory, at the dealership, or by the grower? What’s the learning curve for the grower?

Our main innovation has been in the gyroscope space. Early on in our development of silicon photonics optical gyros, we realized that the main use for them is in navigation for autonomous platforms — such as cars, UAVs, and autonomous boats. Our gyro isn’t something that we can just sell into a market like agriculture on its own. Tractors typically just have a GPS input, then the autosteering system takes care of the rest.

So, our job is to replace that GPS input with our INS input. It uses GPS under perfect open sky. However, when you don’t have those conditions, it integrates the optical gyro and performs high-end dead reckoning, also using the wheel speed odometry, and our advanced sensor fusion engine. We have put years of pretty smart brains towards getting that engine fine-tuned, specifically for the high-end gyro that we have.
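The “use GPS when you have it, dead reckon when you don’t” behavior can be illustrated with a scalar Kalman filter along a single row. This is a deliberately simplified, hypothetical sketch: a real INS estimates full position, velocity, and attitude from gyros and accelerometers, and the noise values `q` and `r` below are assumed for illustration only.

```python
from typing import Optional, Sequence


def fuse_along_row(wheel_speeds: Sequence[float],
                   gnss_fixes: Sequence[Optional[float]],
                   dt: float,
                   q: float = 0.01,
                   r: float = 4.0,
                   x0: float = 0.0,
                   p0: float = 1.0) -> list[tuple[float, float]]:
    """Scalar Kalman filter for position along one row.

    Predict with wheel speed (dead reckoning); correct with a GNSS position
    whenever one is available. gnss_fixes holds None while under canopy.
    q: variance added per step (m^2); r: GNSS measurement variance (m^2).
    Returns (position_m, variance_m2) after each step.
    """
    x, p = x0, p0
    history = []
    for v, z in zip(wheel_speeds, gnss_fixes):
        x += v * dt   # predict: integrate wheel speed
        p += q        # uncertainty grows while dead reckoning
        if z is not None:
            k = p / (p + r)     # Kalman gain
            x += k * (z - x)    # pull the estimate toward the GNSS fix
            p *= 1.0 - k        # uncertainty shrinks after the update
        history.append((x, p))
    return history


if __name__ == "__main__":
    # 10 s of open sky, then 10 s under canopy (no fixes), at 1 m/s.
    fixes = [float(i + 1) for i in range(10)] + [None] * 10
    for step, (pos, var) in enumerate(fuse_along_row([1.0] * 20, fixes, 1.0)):
        print(f"{step:2d}  x={pos:5.2f} m  var={var:.3f} m^2")
```

The printed variance shrinks while fixes arrive and then grows steadily once they stop; a better dead-reckoning model corresponds to a smaller `q` and therefore slower growth.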

It’s very difficult to be an algorithm engineer where you have just a MEMS gyro, MEMS accelerometers, and no real speed data. There’s not much you can do with that. But if you have the high performance of optical gyroscope technology, it enables you, as an algorithm engineer, to dead reckon very accurately without GPS. Then you integrate all that and feed it into the GNSS input on the auto steer system.
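A rough heading-error budget shows why gyro performance is decisive for staying within 20 cm to 30 cm in a row. The sketch assumes a straight 200 m row driven at 2 m/s (about 100 s) and treats gyro bias as constant with zero initial heading error. The bias values of 1 °/h and 10 °/h are hypothetical round numbers, not ANELLO or MEMS datasheet figures. Under these assumptions, a constant heading error must stay below about 0.086°, and a 10 °/h bias drifts about 0.48 m sideways over the row, while a 1 °/h bias drifts about 0.05 m.

```python
import math


def max_heading_error_deg(distance_m: float, cross_track_tol_m: float) -> float:
    """Largest constant heading error (degrees) whose lateral drift over
    distance_m stays within cross_track_tol_m: lateral = distance * sin(error)."""
    return math.degrees(math.asin(cross_track_tol_m / distance_m))


def bias_cross_track_m(speed_mps: float, gyro_bias_deg_per_h: float, t_s: float) -> float:
    """Lateral drift from a constant gyro bias, starting with zero heading error.

    Heading error grows as b*t, so lateral speed is about v*b*t (small angles),
    and the integrated cross-track error is 0.5 * v * b * t^2.
    """
    b_rad_per_s = math.radians(gyro_bias_deg_per_h) / 3600.0
    return 0.5 * speed_mps * b_rad_per_s * t_s ** 2


if __name__ == "__main__":
    print(f"max constant heading error: {max_heading_error_deg(200.0, 0.30):.4f} deg")
    for bias in (1.0, 10.0):  # hypothetical gyro biases in deg/h
        print(f"{bias:4.1f} deg/h -> {bias_cross_track_m(2.0, bias, 100.0):.3f} m over the row")
```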

Who does that integration?

We do all the inertial measurements internal to our system. We read in the wheel speed. We do all the sensor fusion with GPS. Then, we output the tractor’s position, which is far more accurate than it would be just using GPS. So, we do everything that a typical GPS receiver would do, and we send out a position, velocity and attitude.

Who does the integration depends on whether it is a retrofit or built from scratch. If you are, let’s say, John Deere, and you own the entire autonomy stack within this tractor, then you can take our input, add cameras, maybe add a lidar, and you can have your own fusion of those sensors. We have our own sensor fusion with inertial measurement units and GPS. The tractor’s autonomy stack can do the sensor fusion with our output and other visual sensors, such as cameras and lidars. That’s typically what a full autonomy stack might look like.

However, some farmers just have standard, manually driven tractors but want to know where their workers are and want to document exactly which rows were sprayed, because if you skip a row, pests can find their home in those trees and then spread from there. So, if you miss a row, it’s as if you had not sprayed the entire acre or the entire orchard. It’s pretty high stakes.

Also, they often drive at night. It’s a difficult job. They might have to go refuel, then come back and start on the wrong row.

The distance between rows is going to be a lot more than 30 cm, so you don’t need that accuracy to identify a row.

Totally. I’m getting into the autonomy use case, for which you need that 20 cm to 30 cm accuracy.

For the documentation use case — where you just retrofit a tractor with our technology — all this data is saved, then maybe they download the file to their laptop and see exactly which rows were sprayed. Maybe they see that a few rows were missed, so they can go back and spray them the next morning. We found that human error, such as missing a row or double-spraying one, is a big problem. It is true that, for that purpose, you don’t need 20 cm to 30 cm accuracy, but you’d really like to have at least 1 m to 2 m accuracy, which you would not get under heavy canopies without some sort of dead reckoning.
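The after-the-fact check described above can be sketched as mapping logged positions to row indices and listing the rows with no sprayer-on samples. This is a hypothetical illustration: it assumes the log has already been converted to a local orchard frame in which one coordinate is measured perpendicular to the rows, that the row spacing is known, and that the sprayer is off during headland turns. It also shows why roughly 1 m to 2 m accuracy suffices here: a pass is only assigned to the wrong row if the position error exceeds half the row spacing.

```python
def rows_sprayed(log, row_spacing_m, first_row_m=0.0):
    """Return the set of row indices driven while the sprayer was on.

    log: iterable of (cross_row_m, sprayer_on) samples, where cross_row_m is
    the logged position measured perpendicular to the rows, in metres.
    """
    return {
        round((cross_row_m - first_row_m) / row_spacing_m)
        for cross_row_m, sprayer_on in log
        if sprayer_on
    }


def missed_rows(log, row_spacing_m, n_rows, first_row_m=0.0):
    """Rows 0..n_rows-1 with no sprayer-on samples, sorted for a to-do list."""
    sprayed = rows_sprayed(log, row_spacing_m, first_row_m)
    return sorted(set(range(n_rows)) - sprayed)


if __name__ == "__main__":
    # Hypothetical log with 6 m row spacing; row 2 was crossed with the sprayer off.
    log = [(0.1, True), (5.9, True), (6.4, True), (12.0, False), (18.2, True)]
    print(missed_rows(log, 6.0, 4))  # -> [2]
```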

So, you feed the position, speed, and attitude from your integration into the pre-existing input that was made to receive a signal from a GNSS receiver?

Yeah, that’s exactly right.

Are they standard NMEA messages?

Many of these autonomy systems are basically hard-coded to accept these messages straight from a GPS source. We put our inertial solution into these messages so that they can be easily ingested by pretty much any autonomy stack that would be using GPS. It’s a simple plug-and-play to exchange someone’s GPS receiver with our INS solution. Obviously, they need to do some testing to optimize placement, installation, and so on, but in terms of interfacing, it’s a plug-and-play switch.
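To show what “putting the inertial solution into GPS messages” can look like, here is a sketch that formats a position as an NMEA 0183 GGA sentence with its checksum (the XOR of every character between `$` and `*`). The interview does not say which sentences ANELLO outputs; GGA is used only as a familiar example. The `GP` talker ID is an assumption (multi-constellation devices often use `GN`), and setting fix quality 6 (“estimated”, i.e. dead reckoning) during GNSS outages is an illustrative choice, not a description of ANELLO’s product.

```python
from datetime import datetime, timezone
from functools import reduce


def nmea_checksum(body: str) -> str:
    """XOR of every character between '$' and '*', as two uppercase hex digits."""
    return f"{reduce(lambda acc, ch: acc ^ ord(ch), body, 0):02X}"


def to_nmea_angle(value_deg: float, deg_digits: int, pos: str, neg: str) -> tuple[str, str]:
    """Convert signed decimal degrees to NMEA (d)ddmm.mmmm plus a hemisphere letter."""
    hemisphere = pos if value_deg >= 0 else neg
    value_deg = abs(value_deg)
    degrees = int(value_deg)
    minutes = round((value_deg - degrees) * 60.0, 4)
    if minutes >= 60.0:  # rounding carried into the next whole degree
        degrees += 1
        minutes -= 60.0
    return f"{degrees:0{deg_digits}d}{minutes:07.4f}", hemisphere


def gga_sentence(t: datetime, lat: float, lon: float, fix_quality: int,
                 num_sats: int, hdop: float, alt_m: float, geoid_sep_m: float = 0.0) -> str:
    """Build a GGA sentence; fix_quality 4 = RTK fixed, 6 = estimated (dead reckoning)."""
    lat_s, ns = to_nmea_angle(lat, 2, "N", "S")
    lon_s, ew = to_nmea_angle(lon, 3, "E", "W")
    fields = [
        "GPGGA",
        t.strftime("%H%M%S") + f".{t.microsecond // 10000:02d}",  # UTC hhmmss.ss
        lat_s, ns, lon_s, ew,
        str(fix_quality), f"{num_sats:02d}", f"{hdop:.1f}",
        f"{alt_m:.2f}", "M", f"{geoid_sep_m:.2f}", "M",
        "", "",  # age of differential corrections, reference station ID (left empty)
    ]
    body = ",".join(fields)
    return f"${body}*{nmea_checksum(body)}"


if __name__ == "__main__":
    now = datetime(2024, 5, 1, 12, 35, 19, tzinfo=timezone.utc)
    print(gga_sentence(now, 48.1173, 11.516666667, 6, 8, 0.9, 545.4, 46.9))
```

Because the receiving autosteer system only parses the sentence, it cannot tell whether the position came from a GNSS receiver or an INS. That is what makes the swap plug-and-play at the interface level.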

You just used the pronoun “they.” So, who is “they”? Do you have privileged relationships with some of the large manufacturers, such as John Deere? Do you sell your box to dealerships, who then sell it to farmers? Or can farmers order it directly from you and plug it in?

Great question. Unfortunately, I can’t give names of customers, but typically the earliest entryway into this market is companies that can easily adopt new technology and are innovating heavily in the autonomy and ag space. There are several players in that arena, and we’ve found a lot of success there. Many companies are retrofitting existing tractors with an autonomy stack; for some, that is their entire business model. They take commercial off-the-shelf (COTS) systems such as ours, or a lidar or a camera, and retrofit a tractor.

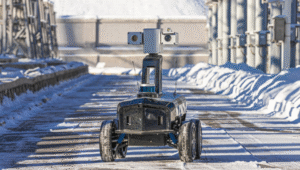

Others are building, completely from scratch, an autonomous robot that is performing certain tasks, such as spraying or harvesting inside an orchard.

In both of those cases, those who buy your products are integrators who build and sell systems, not dealers or end users.

Yes, they either build systems or retrofit tractors. The dealership is also a very good option, because the manufacturers of these tractors have skin in the game for staying ahead on autonomy. They want their tractors to be known as having the highest tech. So, for a dealership to offer our state-of-the-art, autonomy-enabling technology would be a huge benefit to the company.

We have field applications engineers involved, either training dealerships to retrofit tractors or helping with the integration itself, so we might be directly involved in that integration. So far, however, the earliest market for that has been with companies that are integrators themselves.

When you’re talking about autonomy, are you talking about totally autonomous machines that roam the fields without a driver in the seat or about steering tractors that still have drivers to monitor them and can intervene as needed?

My understanding of how autonomy transforms an agricultural machine is that you start with manual drivers and then move toward autonomy. It is similar to the addition of advanced driver assistance systems (ADAS) to passenger vehicles. Eventually, you have no driver.

I would imagine that farmers who own a tractor and are trying to retrofit — or, maybe, a company like John Deere that sells tractors — might start off with an autonomous tractor that still has a steering wheel and a seat but eventually could just drive itself, once that trust is gained. Trust is the key word. For many of these farmers, it’s hard to go from everything being completely manual and in their control to fully trusting autonomy.

In some cases, that trust can be gained through full testing, over and over again, and continuous improvements. It’s possible for a farmer to go from fully manual to fully autonomous immediately, but I imagine that there’s a transition period during which tractor manufacturers are still adding steering wheels while also integrating more and more autonomy in their tractor systems.

In the agricultural environment, besides INS, which sensors — such as radar, lidar, cameras, and odometers — are most useful? For example, for obstacle detection.

Besides knowing where you are, the most important thing in autonomy is obstacle detection. If a dog runs into the orchard, you want to be able to stop. You can do this with either lidar or radar, which are both depth sensors.

Cameras, plus AI, enable you to identify objects.

You would want a stereo vision camera, so that you can tell how far away the object is. Every company has a different approach to this. Ideally, you have every sensor, but there is a tradeoff between cost and computation. Everyone makes that tradeoff differently, but it’s some combination of cameras, lidars, and radars.
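The reason a stereo camera can tell how far away an object is comes down to the standard rectified-stereo relation Z = f·B/d (focal length in pixels, baseline between the cameras, disparity in pixels). The camera parameters below are hypothetical. The second function shows the usual caveat that depth resolution degrades with the square of distance (ΔZ ≈ Z²/(f·B) per pixel of disparity), which is part of why teams weigh stereo against lidar or radar.

```python
def stereo_depth_m(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive; zero disparity means the point is at infinity")
    return focal_px * baseline_m / disparity_px


def depth_step_m(focal_px: float, baseline_m: float, depth_m: float) -> float:
    """Approximate depth change per one-pixel disparity change: dZ ~= Z^2 / (f * B)."""
    return depth_m ** 2 / (focal_px * baseline_m)


if __name__ == "__main__":
    # Hypothetical camera: 700 px focal length, 12 cm baseline.
    z = stereo_depth_m(700.0, 0.12, 14.0)
    print(f"depth {z:.2f} m, resolution about {depth_step_m(700.0, 0.12, z):.2f} m per pixel")
```

With these assumed parameters, an object at 6 m is resolved only to roughly 0.43 m per pixel of disparity. That is adequate for “something is in front of me, stop” but coarse compared with lidar.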

Do you integrate other sensors — such as lidar or cameras — with your inertial systems?

We just do the inertial navigation part, and our customers integrate the other sensors.

Every time you acquire the GNSS signals after an occultation, such as under heavy canopy, you re-initialize the INS, correct?

When you start up the system, you need GPS signals to know where you are. So, the best scenario would be to start up the system under open sky. However, we’ve found through working with our customers that this isn’t always the case. An autonomous tractor might just stop spraying for the night in the middle of a row and continue its mission the next day. So, they sometimes need to start up under heavy canopy, which means that they wouldn’t be able to acquire GPS signals, at least not with enough accuracy. For that scenario, we’ve added the ability to boot up with your last position. What customers often do with this feature is constantly save their last position and heading from our system; then, as soon as the system boots up, they can decide whether to start from their last known position.

We leave that up to the customer, because there are some cases in which you might shut down the system and, by the time you boot it up again, it is somewhere else. For example, an autonomous tractor will often get towed to move it a long distance. If we always booted it up with its last position, that would cause issues in those scenarios.

So, there are always these edge cases. That’s why we offer this as a feature and let the customer’s system determine whether to boot up with its last known position or with GPS.
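The customer-side logic described above (save the last pose continuously, then decide at boot whether to reuse it) might look like the following hypothetical sketch. The `Pose` fields, the JSON file, and especially the `moved_while_off` flag (for example, set by an operator or a towing log) are assumptions for illustration. The sketch also glosses over how heading is obtained from GNSS at startup.

```python
import json
from dataclasses import asdict, dataclass
from pathlib import Path
from typing import Optional


@dataclass
class Pose:
    lat_deg: float
    lon_deg: float
    heading_deg: float


def save_last_pose(pose: Pose, path: Path) -> None:
    """Persist the latest pose; call this periodically while the system runs."""
    path.write_text(json.dumps(asdict(pose)))


def load_last_pose(path: Path) -> Optional[Pose]:
    """Return the saved pose, or None if nothing has been saved yet."""
    if not path.exists():
        return None
    return Pose(**json.loads(path.read_text()))


def choose_startup_pose(gnss_pose: Optional[Pose],
                        saved_pose: Optional[Pose],
                        moved_while_off: bool) -> Optional[Pose]:
    """Hypothetical startup policy: prefer GNSS, else resume, else wait."""
    if gnss_pose is not None:
        return gnss_pose          # open sky at startup: use the fresh GNSS solution
    if saved_pose is not None and not moved_while_off:
        return saved_pose         # parked under canopy: resume from where it stopped
    return None                   # e.g. towed while off: wait for GNSS instead of guessing
```

Keeping the decision in a separate function mirrors the interview’s point that the right choice depends on context (parked overnight versus towed) that only the customer’s system knows.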

Hopefully, that will become standard soon for car navigation, so that when you start driving from a parking space the system already knows which way you are facing and will guide you accordingly — rather than you having a 50/50 chance of starting out in the right direction!

Yeah, exactly.