Anyone who has had to carry cumbersome legacy surveying instruments over rough terrain can probably confess to daydreaming about having something like Mr. Spock’s “Tricorder,” a small device that captures everything about your surroundings. Such sci-fi devices anticipated the “reality capture” wave of the present day.

Multi-sensor stacks have become the norm and are essential for many geospatial applications. Consider vehicular autonomy. For safety-of-life considerations, no single positioning technique could suffice. Each sensor type has strengths and weaknesses: lidar, radar, GNSS and inertial measurement units each perform well only under certain conditions. That is why a typical autonomy stack will include more than one sensor type. More data of different types is better, especially if it can share a common, highly precise spatial reference. Surveying and mapping are benefiting laterally from the research and development for autonomous solutions. In some ways, autonomy solutions have, in turn, benefited from the long-standing legacy of research and development in geomatics applications.

Platforms, Positioning and Progress

As surveying and mapping practitioners, we are bombarded with news and ads for a seemingly endless parade of the latest reality capture devices: handheld SLAM scanners, drone payloads, mobile mapping systems, backpacks and more. Many are using OEM sensors from a relatively small subset of manufacturers; this trend is similar to the wave of third-party GNSS rovers a decade ago.

While the individual sensors deliver astounding data, integration into field capture devices often shortchanges one crucial element: positioning. There is a tendency to keep cost, size, and power consumption low by including positioning components (e.g., GNSS and IMU) originally developed for mass-market applications like vehicle autonomy. Not every handheld SLAM system can afford to add survey-grade capabilities, like those delivered by dedicated surveying rovers costing tens of thousands of dollars, so users need to have realistic expectations.

A surveyor might wince when they hear yet another SLAM device manufacturer claiming, “centimeter precision, anywhere!” Even with top-tier survey rovers, skill and experience temper such expectations. But what if the platform were a highly capable, survey-grade rover, packed with multiple data capture sensors? This idea is not new, and the evolution of rover-based solutions, in many ways, enabled the development of the current wave of reality capture systems.

Positional Integrity Through Motion

It is a prospect that might seem counter-intuitive: deriving a precise position while moving the instrument. Providing tilt compensation for a GNSS survey rover (on a pole/rod) had long been desired. The impetus was to improve efficiency, namely by removing the time spent leveling the rod for each observation. Freeing the user from the tyranny of the bubble was one goal, but it was also the first crucial step toward enabling further sensor integrations.

Integrity through motion is one of the foundational elements of GPS/GNSS: the trajectories of the navigation satellites can be predicted with high confidence. For example, frequently updated ultra-rapid orbit products utilized in many GNSS solutions rival the “precise” orbit products we used to wait days for. Similar principles apply to modern tilt compensation solutions. The movement of a rover head provides a highly predictable reference trajectory for the orientation of the tilt sensors.

Electronic bubbles and tilt compensation have been around for many years. For example, compensators are standard in many instruments, such as total stations, and tightly coupled GNSS+IMUs in mobile systems for road, sea, and airborne mapping. Consider the SPAN system from Hexagon | NovAtel. Such systems compute centimeter-grade positions for moving cameras, lidar units, and other sensors, while taking into account heading, speed, pitch, yaw, roll, and in the case of marine systems, heave — at highway speeds, plowing through rough seas, or zipping across the sky. The challenge, though, was miniaturization. Could such capabilities be developed to fit into a standard survey rover?

Tilt compensation in rovers began to appear over a decade ago, for example, in some JAVAD rovers of the day; other manufacturers soon followed. Unfortunately, these were magnetically oriented systems at the time. While they were a noteworthy achievement, the inconsistencies inherent to magnetic reference and the cumbersome calibration routines soured many users on the idea of tilt compensation.
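For readers who like to see the geometry, here is a minimal sketch of how a tilted-pole observation reduces to a ground point, and why the quality of the heading reference matters so much. It is not any manufacturer's algorithm; the function names, the local east/north/up frame, the angle conventions, and the sample numbers are all illustrative assumptions.

```python
import math

def pole_tip_from_antenna(east, north, up, pole_length, tilt_deg, azimuth_deg):
    """Reduce an antenna position to the pole tip for a tilted pole.

    Assumed conventions (illustrative only): coordinates are local
    east/north/up in meters, tilt is measured from vertical, and azimuth is
    the direction the pole top leans, clockwise from north.
    """
    tilt = math.radians(tilt_deg)
    az = math.radians(azimuth_deg)
    horizontal = pole_length * math.sin(tilt)  # horizontal offset of antenna from tip
    vertical = pole_length * math.cos(tilt)    # height of antenna above tip
    # The tip lies opposite the lean direction and below the antenna.
    return (east - horizontal * math.sin(az),
            north - horizontal * math.cos(az),
            up - vertical)

def tip_error_from_heading_error(pole_length, tilt_deg, heading_error_deg):
    """Horizontal tip displacement caused by an error in the lean azimuth."""
    radius = pole_length * math.sin(math.radians(tilt_deg))
    # Chord between the true and mis-oriented tip on a circle of this radius.
    return 2.0 * radius * math.sin(math.radians(heading_error_deg) / 2.0)

# Hypothetical observation: 2 m pole leaning 30 degrees toward the east.
tip = pole_tip_from_antenna(100.0, 200.0, 52.0,
                            pole_length=2.0, tilt_deg=30.0, azimuth_deg=90.0)
err = tip_error_from_heading_error(2.0, 30.0, 5.0)
print(tip, round(err, 3))
```

With these made-up numbers, a 5-degree heading error on a 2 m pole tilted 30 degrees shifts the computed tip by roughly 9 cm, which gives a sense of why an unreliable magnetic heading could undermine an otherwise centimeter-grade GNSS position.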

This changed with the announcement by Leica Geosystems at the October 2017 INTERGEO international exhibition and conference in Berlin of the no-calibration tilt-compensated Leica GS18 T GNSS rover. This was one of the first “stacked rovers,” spawning a chain reaction of similar feature integration across the industry.

“The main thing we wanted to do was to make measurements faster for the user,” said Bernhard Richter, vice president of product management geomatics at Leica Geosystems, when I interviewed him at the time of the GS18 T launch. The process was part of a 10-year initiative to improve field efficiency, but it was not the sole goal. Dynamic precise positioning was the first step to enabling the integration of additional features.

After a year or so of end users getting over the skepticism of tilt compensation, you will now find it as a standard feature on nearly all new GNSS rovers. Of course, users need to verify and build confidence in new solutions. Surveyors of a certain age might remember when nervous party chiefs would require taping/chaining of distances to verify if those “new lasers” were working right.

Adding Sensors

GNSS in sky-view-challenged environments was the impetus for the first multi-sensor integrations, namely, how to get the shot under the adjacent canopy or roof overhang. While manufacturers and integrators had been offering various ranging lasers as peripherals for GNSS rovers since the late 1990s, the Achilles heel was the orientation, which was limited to magnetic references. Despite this handicap, such offset point solutions were popular for certain applications (e.g., asset inventory, trail mapping).

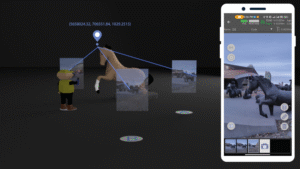

Once dynamic precise positioning was achievable in a rover head, it could become a platform for additional data capture sensors. Cameras were the logical first step. A camera included on some JAVAD rovers was leveraged by some users for photogrammetric computation of offset points. Leica Geosystems soon followed up its GS18 T with the GS18 I, which added camera-based offset point capabilities. Unlike some early photogrammetric offset point solutions, where you took individual static photos, the GS18 I captures a series of precisely spatially registered images while in motion. In the companion Leica Captivate software, you can quickly identify the offset points. The same images can be processed in the software as a 3D point cloud, which many users do.

Again, we started to see camera-based offset point (and stakeout) solutions in nearly every brand of GNSS rover. What’s next? We’re starting to see lidar added to some rovers. But at what point should a field user reach for a dedicated reality capture device, rather than trying to do everything with an enhanced GNSS rover? There’s a lot to consider, so we asked some integrators for insights.

Eric Gakstatter, principal and owner of Discovery Management Group, has been promoting multi-sensor integration at conferences for decades. His firm also has developed and consulted on such sensors in conjunction with various manufacturers. Gakstatter highlighted some successful sensor integrations.

“There’s a difference between, say, those lidar SLAM devices and rovers,” he said. “With one, you are looking for a point cloud, but with the other, you are more interested in discrete points of interest; you’re very deliberate in measuring specific features.” Gakstatter had recently given me an overview of the Eos Positioning Systems Skadi GNSS rover, a next-generation receiver from the makers of the Arrow GNSS receiver systems. The Skadi features a built-in antenna and full tilt compensation, but also can be paired with a companion smart handle that offers some interesting multi-sensor capabilities. First, there is a “virtual pole” feature that wirelessly measures the distance straight down to the ground, eliminating the pole for certain applications. Another feature is a ranging sensor with a visible laser pointer for offset points and stakeout. “You can pull the trigger and trace along a curb line, collecting points in a continuous mode,” said Gakstatter. “It’s literally point and shoot simplicity.”

His firm has also developed, in partnership with another manufacturer, an angle encoding peripheral that mounts on the pole. When used in conjunction with a rangefinder-style laser, the GNSS rover can perform traverses in much the same manner as a surveyor’s total station. In a field demonstration for a utility services company, the crew did such a traverse along a roadway, then under canopy, in the shadow of tall buildings, inside a covered parking garage, and then closing back on the beginning outdoor points. This process would have taken much longer with conventional instruments.
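The value of closing back on known points can be shown with a short calculation. The sketch below is a generic traverse misclosure check, not the firm's software; the function name, the azimuth convention (clockwise from north), and the sample legs are assumptions made for illustration.

```python
import math

def traverse_misclosure(start, legs, closing_point):
    """Run a traverse from `start` through (azimuth_deg, distance_m) legs and
    compare the computed end point with a known closing point.

    Returns (d_east, d_north, linear_misclosure, precision_ratio), where the
    ratio is total traverse length divided by the linear misclosure.
    """
    east, north = start
    total = 0.0
    for azimuth_deg, distance in legs:
        az = math.radians(azimuth_deg)
        east += distance * math.sin(az)   # departure
        north += distance * math.cos(az)  # latitude
        total += distance
    d_e = east - closing_point[0]
    d_n = north - closing_point[1]
    linear = math.hypot(d_e, d_n)
    ratio = total / linear if linear > 0 else math.inf
    return d_e, d_n, linear, ratio

# Hypothetical loop: four 100 m legs, with 2 cm of error on the last one.
legs = [(0.0, 100.0), (90.0, 100.0), (180.0, 100.0), (270.0, 100.02)]
print(traverse_misclosure((0.0, 0.0), legs, (0.0, 0.0)))
```

In a workflow like the one described, the start and closing coordinates would come from GNSS observations in open sky, while the legs under canopy or inside the garage would come from the encoder angles and laser distances.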

While a strong advocate for multi-sensor integration, Gakstatter points out some of the challenges. “It just takes so long to get the right technology, the right packaging, the right power consumption, and then the manufacturers might need years to incorporate it,” said Gakstatter. “You can talk about these future technologies, but it just takes so long. Think about it, why haven’t the iPhone or the iPads become more rugged? Why can’t they make a replaceable battery? You know, all kinds of technology could be done in consumer devices, even multi-frequency GNSS, but they don’t do it. It’s driven by power consumption, real estate inside, size and cost.”

“I think you’ll find that some folks who just are going to go after the low-end sort of basic RTK receiver and some simple integration,” said Gakstatter. “But, you’ll always have the manufacturers that are sinking a lot of time and money into research and development, the pioneering stuff. But it is very expensive and for a relatively small market.” When new solutions are developed and integrated by those willing to invest in research and development, users gripe about high prices for the gear, but the payoff is a major increase in productivity and safety. Sure, such features might show up in lower-price-range rovers, but with varying degrees of performance.

Rover Lidar

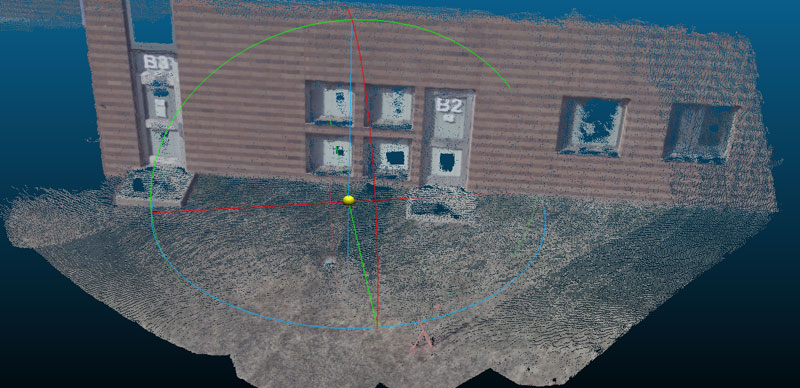

Lidar can be quite powerful for creating 3D point clouds and models. However, doing a formal scanning campaign with a large-format laser scanner is not always justified. Even if a site is scanned, surveyors might still need to shoot key features with a total station and/or GNSS rover. This practical reality is why limited scanning capabilities (and imaging) were integrated into some total stations, for example, the Trimble SX12 total station and the Leica Nova MS60 MultiStation. As such instruments provide, by default, highly precise spatial references, any of the selective scans are automatically registered. Users find this aspect highly attractive: they capture rich 3D point cloud data and images without the need for extra registration steps.

It was inevitable that GNSS rover manufacturers eventually would seek to add lidar scanners. In what might be one of the first, if not the first, such integrations (in a broadly distributed commercial rover), CHC Navigation (CHCNAV) has its RS10 rover. It is a fully functioning high-performance GNSS rover but with a puck-style scanning head (16 channels or 32 channels for 320K or 640K pulses per second) mounted vertically, offset under the antenna. RTK and PPK workflows are supported.

It can operate as a rover or as a SLAM scanner. It should be noted that the scanner head is of the same quality as similar OEM heads on many SLAM handhelds, small mobile mapping systems, and even drone payloads.

According to Logan Zhou, mobile mapping business director at CHCNAV, when the RS10 was released in 2024, it brought a substantial boost to its mobile 3D sales. I asked what the fundamental differences were between this enhanced rover and a conventional SLAM mapping instrument.

“Compared to a traditional SLAM solution, we have integrated a high-performance receiver and antenna,” said Zhou. “So, the users can acquire the results with geographic coordinates directly.” He added that there are three cameras integrated into the RS10, for image capture and point cloud colorization. Elements of visual and lidar position stabilization are also leveraged in what they call SFIX, to extend positioning capabilities into GNSS-challenged areas.

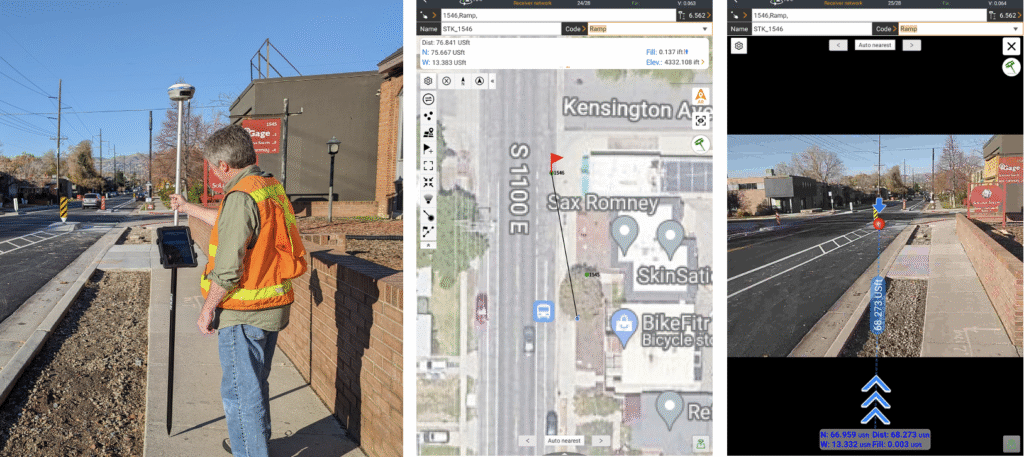

Matt Sibole, PLS, owner of iGage Mid-Atlantic, part of the popular iGage surveying equipment sales and support network, has several RS10s in his rental inventory. He finds the unit very capable as a reality capture system. Sibole also related that his customers are very pleased with the CHC units that have camera-based offset and stakeout capabilities, like the i89 and i93 (which have forward and downward tilted cameras for stakeout). The i89 and i93 point cloud capabilities utilizing photogrammetry are also quite intriguing to many of his customers.

One consideration of adding a high-precision SLAM scanner to a rover is weight. When using one for standard rover point capture for extended periods, the added weight can become a burden. Sibole prefers a different topography survey workflow. “I’ll go through with my CHC i89 rover, and pick specific points I need, as a check,” said Sibole. “I’ll shoot center and rims of manholes, shoot some property corners, etc. Then, I put that away and grab the RS10. With a connection source from my base, and I set it up for NTRIP, I can just walk around the site and collect the lidar.”

We discussed the growing trend for reality capture systems to have multiple deployment configuration options. You will see some compact systems that can be handheld, mounted on chest or backpack harnesses, attached to vehicles, and even carried as drone payloads. The one element that, unfortunately, sometimes gets shortchanged is the GNSS component. As I alluded to earlier, there are low-cost GNSS boards that might be suitable for coarse mapping applications, but if you are looking for engineering design or construction quality models, quality makes a big difference.

Cameras Abound

Cameras are relatively small, light and low-cost. They have been a logical integration for at least some models of nearly all GNSS rover brands. Nearly a decade ago at an INTERGEO exhibition, I spoke with Winston Wen, CEO of the then relatively new brand of Tersus GNSS. Wen predicted a great future for multi-sensor GNSS rovers, noting that no-calibration tilt would be the crucial first step, which Tersus would integrate into their line of rovers.

Next, he wanted to develop enhanced rovers for users who could benefit from not having to pack a bunch of different instruments into the field, especially in remote areas, like for his customers in the Australian outback. Tersus now has Trek, essentially a version of their flagship high-performance Oscar rover, but with an integrated camera.

“I think photogrammetry could overtake lidar, for many applications, as the sensor fusion gets better, the algorithms get better, and the actual physical hardware gets better,” said Jesse Huff, surveyor, geospatial thought leader, and general manager of Tersus in North America. “Even in the literary world, you know, a picture is worth a thousand words. There’s a lot more information to be gleaned from the images and the stuff that we’re doing with point clouds. The feature recognition revolution is just getting started.

“The point cloud that we’re getting out, I won’t say it’s as clean as some lidar data,” said Huff. “There’s a little bit of noise in that, but it rivals lidar data for most of what folks are doing, save for structural mechanical work.” The camera-based offset point and stakeout workflows of different manufacturers vary. Tersus offers the users three approaches. One is to initiate the automated image capture sequence by picking a target object in the controller, then it will proceed to look for the same point in subsequent images, to perform the triangulation.

Another is to take the image sequence and pick points in two or more images in the controller. Huff joked that the old-school surveyor in him prefers the latter option, but notes that as feature recognition algorithms improve, the process in such systems will become even more automated. One more option is to create a point cloud from the images and then select points from that.
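The triangulation behind picking the same point in two images can be sketched in a few lines. This is a generic two-ray intersection, not the Tersus implementation; it assumes each pick has already been converted into a camera position and a ray direction in a common coordinate frame, and the function name and sample coordinates are hypothetical.

```python
import math

def triangulate_two_rays(c1, d1, c2, d2):
    """Estimate a 3D point from two rays (camera center + direction).

    Returns the midpoint of the shortest segment joining the rays and the
    length of that segment, which serves as a rough quality indicator.
    """
    dot = lambda u, v: sum(a * b for a, b in zip(u, v))
    w = tuple(p - q for p, q in zip(c1, c2))
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12 * a * c:
        raise ValueError("rays are (nearly) parallel; no stable intersection")
    t1 = (b * e - c * d) / denom  # parameter along ray 1
    t2 = (a * e - b * d) / denom  # parameter along ray 2
    p1 = tuple(o + t1 * v for o, v in zip(c1, d1))
    p2 = tuple(o + t2 * v for o, v in zip(c2, d2))
    midpoint = tuple((u + v) / 2.0 for u, v in zip(p1, p2))
    return midpoint, math.dist(p1, p2)

# Hypothetical picks: two camera stations 4 m apart viewing the same target.
point, gap = triangulate_two_rays((0.0, 0.0, 2.0), (5.0, 10.0, -1.0),
                                  (4.0, 0.0, 2.0), (1.0, 10.0, -1.0))
print(point, gap)
```

A longer baseline between camera stations generally gives a stronger intersection, and a large gap between the rays is a hint that a pick, or the position and orientation of one image, deserves a second look.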

One characteristic of legacy close-range photogrammetry that is the source of lingering doubts about its utility is the cumbersome processing workflows of the past. Huff pointed out that the creation of the point clouds from the Trek is performed on the data controller, like their TC40. The same Tersus field software can run on an Android tablet or phone.

“I’ve done point cloud generation with Tersus Nuwa field software using my phone,” said Huff. “I’ve got a Samsung Z Fold5; it’s got a better processor, a quad-core processor, than the first computer that I was using for point cloud registration and processing.”

That brings up a good point, noted Huff: “When you talk about sensor stacks, it’s not just sensors, it’s technology stacks. It’s not just taking advantage of the tech that’s in the receiver, it’s also taking advantage of the tech that has evolved in terms of mobile computing devices, artificial intelligence (AI), the cloud and more.”

GNSS Quality

For many indoor, GNSS-denied environments, there are reality capture systems that can produce, through visual and SLAM stabilization, highly precise 3D point clouds and downstream models. A good example is the BLK family of reality capture devices from Leica Geosystems. The Leica BLK2GO is a handheld SLAM device that scans up to 420,000 points per second. It employs a tight integration of an internal IMU, a unique camera-based progressive spatial reference technique, and SLAM stabilization (together dubbed “GrandSLAM”).

For indoor applications, the crucial reference is relative to the structure. Outdoors, a SLAM scanner would typically need terrestrial reference points for absolute spatial registration, and/or leverage GNSS. Here is where the quality of the GNSS could mean the difference between having to set many control points with other instruments (substantially adding to project costs), or an appropriate minimum.

This paradigm was of prime consideration for Looq AI, when they were developing their flagship handheld, camera-based reality capture system. The system is a harbinger of another trend (that would need a separate article), about how close-range photogrammetry is coming into its own, and with recent advancements and techniques, is becoming a serious challenge to lidar, and especially SLAM lidar. I compared point clouds for a small site, between that of the Looq handheld, and a large format terrestrial laser scanner — and could not see any difference. How does it do this? And what role did precise GNSS play in this?

The script has been somewhat flipped. In the case of one recent development, instead of adding cameras to a rover, a high-quality GNSS+IMU was added to a high-quality, rigorously calibrated, camera-based reality capture handheld.

“From a technological foundation, we knew that for triangulating thousands of image features, which are represented by points in the real world, multiple optics were required,” said Dominique Meyer, co-founder and CEO of Looq AI. “We create a triangulation algorithm for the camera system that would provide robustness that is much greater than many of the photogrammetric single-camera solutions out there, and at the same time, exceed the point cloud density of many lidar point clouds. A precise spatial and temporal component is crucial to apply to the observations.

“If you look at computer vision and GNSS independently as two independent sensor stacks, GNSS is robust in very specific environments,” Meyer added. “You have the number of satellite observations, quality of observations, precise time, and a set of corrections. Only when those are all good do you have great GNSS localization capability. The second you lose any of that, because you’re in a forest or under a roof or a bridge, you don’t necessarily know how robust your GNSS localization is.”

This is why Looq decided to make post-processed kinematic (PPK) an integral part of their workflow; PPK is performed automatically for the user, in the cloud, before point cloud processing. “Fundamentally, we knew that post-processing would apply better corrections, because it enables a backward pass in the corrections, fundamentally always exceeding or equating the quality from an RTK solution,” said Meyer. “Because our computer vision algorithms always run after the capture, we knew we could afford to run a PPK service, which would also allow us after the fact to decide which correction services to use. If you have a local base, you can use that. If you don’t have a local base, you can use a public correction service. And if you don’t have that, you can use a PPP solution.”
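Meyer's point about the backward pass can be illustrated with a deliberately simplified calculation. The sketch below is not Looq's or any vendor's processing engine: it assumes the forward and backward solutions at an epoch are independent scalar estimates with known variances, and combines them by inverse-variance weighting. Real PPK engines smooth full state vectors and resolve carrier-phase ambiguities, which this ignores; the function name and numbers are hypothetical.

```python
def combine_forward_backward(x_fwd, var_fwd, x_bwd, var_bwd):
    """Inverse-variance weighted mean of a forward and a backward estimate.

    Assumes the two estimates are independent. The combined variance is
    never larger than the smaller of the two input variances.
    """
    if var_fwd <= 0 or var_bwd <= 0:
        raise ValueError("variances must be positive")
    w_fwd = 1.0 / var_fwd
    w_bwd = 1.0 / var_bwd
    var = 1.0 / (w_fwd + w_bwd)
    x = var * (w_fwd * x_fwd + w_bwd * x_bwd)
    return x, var

# Hypothetical epoch just after emerging from canopy: the forward pass is
# still reconverging (sigma 0.30 m), while the backward pass, arriving from
# open sky, is already tight (sigma 0.02 m).
x, var = combine_forward_backward(10.25, 0.30 ** 2, 10.02, 0.02 ** 2)
print(x, var ** 0.5)
```

Under these assumptions, the combined estimate sits close to the better of the two passes, and its uncertainty is no worse than either one alone, which is the intuition behind post-processing matching or exceeding a real-time solution.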

Another key component was the choice of the GNSS and IMU components of the Looq handheld. “We considered many hardware options. We had a layout of pretty much every GNSS receiver at the time out there. What we cared about was multiple bands, especially the newer bands like L5,” said Meyer. As noted, there are GNSS boards for many different applications, and while some of the lower-cost solutions are “multi-constellation,” they may only support a limited number of signals per constellation. Being able to work in limited sky and high multipath environments was important; low-cost boards can struggle with this.

Looq chose components from Septentrio. “The other part is spoofing and interference,” said Meyer. “Not that it is super common, Septentrio has put a lot of effort into filtering to make sure that you don’t get third-party interference across the RF spectrum that would affect the quality of the GNSS. Tests we had completed demonstrated that with the multiple bands and with the RF reliability, it was the best, which would allow us to produce the best market product.”

Duality

I circled back with Bernhard Richter of Leica Geosystems, who shepherded the development of that pioneering stacked rover, the GS18 T. We discussed the question of GNSS rovers as a multi-sensor platform. Several key themes emerged from the conversation.

Surveying is, in many ways, reality capture, but it is handled in a different, more selective and focused manner. Features observed must often meet very tight precision and integrity expectations: property marks, control for engineering and construction, monitoring, and more. Reality capture is about mass data capture. While there are advantages for certain applications in the proximal richness of mass data capture, there always may be the need for discrete points.

“When we designed the GS18 T, we recognized a challenge in bridging the gap between single-point measurement and reality capture,” said Richter. “Our goal was to improve the efficiency of discrete point collection while maintaining survey level quality.” With tilt achieved, the later addition of camera-based offset point capabilities to the GS18 I, was simply to improve efficiency even further.

“One of the main concepts behind the GS18 I is that everything you capture through photogrammetry is immediately aligned with the same reference frame or coordinate system as your normal GNSS points,” said Richter. “This additional feature does not put any extra burden on your hardware, and if you work in the conventional way, it performs just as well as any other method.” While you can produce point clouds from the images, that was not the primary goal, but a nice feature for certain situations. This raised another consideration: is capturing potential excess data a wise move?

“You can use mobile scanners, or sensors on your rovers, and generate point clouds of everything,” said Richter. “Capturing massive amounts of data is easy from a hardware perspective. But then you have to process and use all of this data. Right now, the software side of the industry is playing catch up. There just isn’t enough automation yet, though AI will certainly help with that moving forward.” It is a common complaint in the geospatial sector that there are substantial backlogs in the office processing of reality capture data.

“Yes, you can scan everything and then have someone extract the needed data from the point cloud,” said Richter. “But depending on the application, your client might be better off with a more conventional survey of discrete points chosen in the field. That said, for capturing large areas or long road corridors, a terrestrial scanner, mobile mapping system, or drone may be the most efficient option.”

Those applications aside, I hear misgivings among surveyors about the “scan everything” approach. They often can meet the needs of clients with conventional topo, mapping right there in the field, and that reduces the office component dramatically compared to a scanning workflow. So, is there a compelling need (for now) to add mass data capture sensors to survey rovers? Perhaps not. As prices for reality capture devices, SLAM scanners, drones, etc., drop over time, they become part of a firm’s standard kit, along with a rover and total station.

“We’ve always been keenly aware of the technology hype cycle,” said Richter. “There’s the initial excitement, then a trough of disillusionment, but if a solution truly improves productivity, adoption comes later. We could have added magnetic oriented tilt compensation long ago, but recognized that we could not rely on the Earth’s inconsistent magnetic field. It could have resulted in a substandard product. It was best to invest our efforts into the type of tilt used in the GS18 T.” While I did not press for any details about what might be next for their research and development, I sensed that the subject of additional sensors for rovers would keep the same principle in mind.

“At the end of the day, you need to solve specific customer problems,” said Richter. “There’s a lot that can be done, but not everything adds efficiency in the way people might expect. Often, the downstream automation isn’t there yet to handle a flood of additional data. Integrating additional sensors can require tradeoffs, and in many cases, there may already be more efficient options available. Not every application benefits from a mass data capture approach.”

What If?

Manufacturers can be averse to revealing what they’re working on next, but watching lateral developments in the world of geospatial sensors, certain moves might seem inevitable. We’ve seen how disruptive the addition of various types of Time-of-Flight (ToF) sensors to consumer tablets and phones has been. For certain (very short-range) applications, the point cloud quality from some phone sensors rivals, or even bests, that of some lidar systems. There are also examples of highly refined ToF sensor implementations in reality capture devices, such as the Leica BLK2GO PULSE.

There has even been a wave of devices that allow you to attach your ToF-enabled phone to the handle, which also features an RTK-capable GNSS antenna and receiver. These have become popular, for instance, capturing utilities in an open trench before they are buried. One concern is that there is a tendency to skimp on the GNSS hardware. You only get to measure those pipes once. Why not get the best-quality data?

So, why not put the ToF sensors on a high-performance rover? Someone is bound to do it; it is just a matter of time. The same applies to other solid-state lidars. There are some types of what folks call solid-state lidar that have moving parts on the MEMS. But now we are seeing true solid-state lidar (no moving parts), for instance, the units integrated into mobile mapping and road inspection systems by XenomatiX. There are still some miniaturization and power budget issues ahead, but we will likely see solid-state lidar on rovers at some point, which would overcome the weight handicap of SLAM lidar heads attached to rovers.

Then we can look ahead to the near-sci-fi realm of quantum sensors. Read more on this subject at: gogeomatics.ca/quantum-surveying. Quantum technologies could someday upend imaging and lidar approaches, and other sensor types. For example, quantum radar could employ more bands on a single, smaller antenna than the large separate antennas required of conventional radars. Radar has particular advantages for foliage penetration. Imagine scanning a roadside with solid-state lidar, and then radar enhances what you can see under and behind the vegetation.

Relatively small quantum antennas have been demonstrated to detect the entire RF spectrum; these could be super-sensitive to specific bands and help mitigate multipath, spoofing and jamming hazards. On the subject of commonly mentioned vulnerabilities to GNSS, quantum magnetometry is showing promise not only as an alternate navigation approach; it could also act as a “canary in a coal mine” for GNSS applications, detecting offsets that would indicate spoofing.

Within the last month, a functioning and commercially offered quantum navigation device was announced, and it is nearly pocket-sized. Sorry for the geek detour, but there could be fascinating things ahead.

Some folks dismiss the prospect of a multi-sensor GNSS rover as being like a multi-tool, like a Leatherman or Swiss Army-style knife: super handy when you need it, but the individual tools might not ever match the utility of a dedicated tool. I’m among those who, to some degree, disagree with that analogy. Unless it is a case of some slapped-together systems (with the lowest cost components possible), for many systems, the added-on features can boost productivity and deliver precise data (with careful and appropriate practices).

The zeal to stack rovers does not always lead to success. There was the case of a manufacturer embarking on an earnest initiative to build a sensor-rich add-on for a GNSS rover, which some envisioned as a way to match the capabilities of a total station. However, it appears that the initiative has been suspended.

There’s a lot for users to consider: do they invest in multiple dedicated instruments, and can they afford the best of each? But on the other hand, is the stacking of multiple sensors on a GNSS rover really warranted or even practical? Can combo systems effectively meet most of your needs? Do you consider fit-for-purpose solutions? The good news is that the progression of innovation in hardware and software is continuous. There are a lot of intriguing options already out there, with more to come.